There’s a phrase oncologists love: “Trust, but verify.” In today’s clinical research, “verify” is enjoying a major renaissance, thanks to probabilistic data linkage via tokenization. If you’re still matching patients with Excel macros and Post-it notes, do let us know your favorite flavor of chaos!

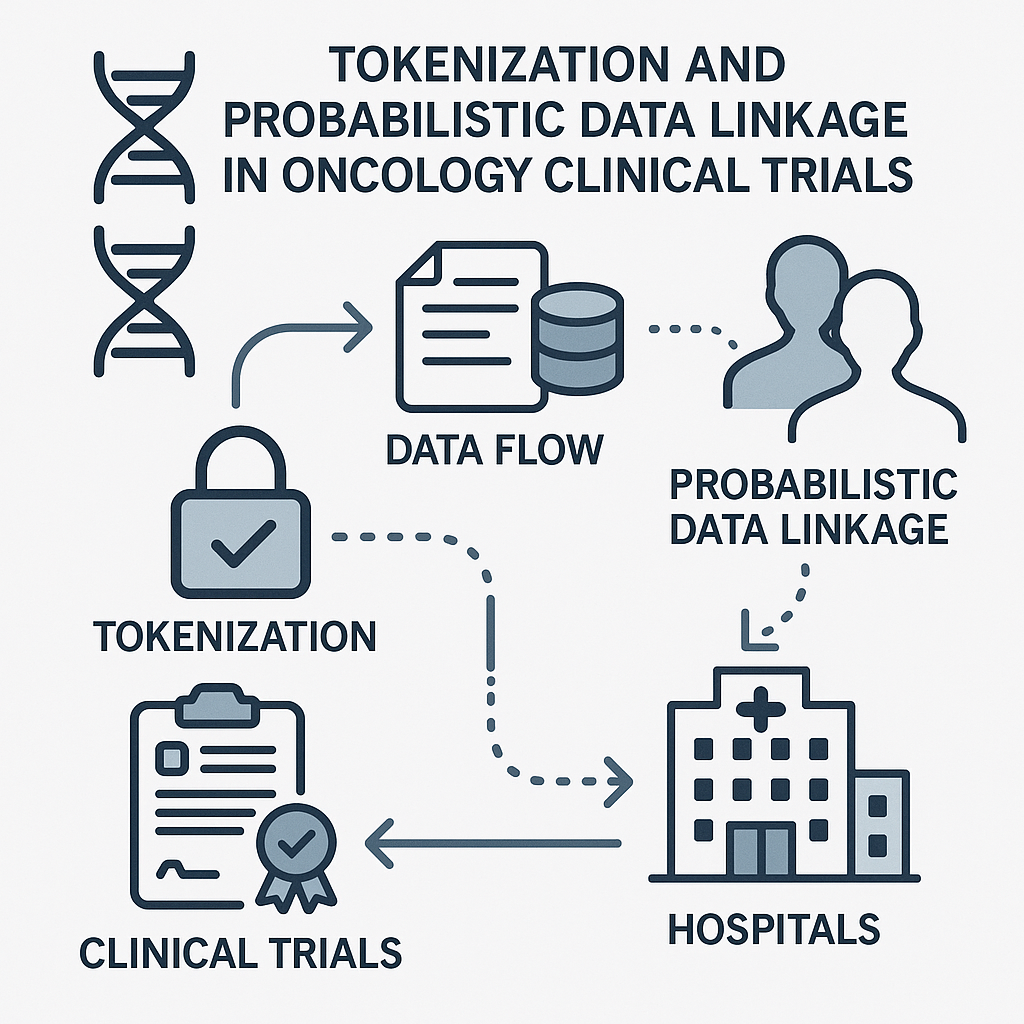

Probabilistic data linkage with tokenization is the art (and science) of connecting patient records across clinical trials, EHRs, pharmacy data, and claims without ever exposing a single bit of personally identifiable information (PII). Instead, we hash PII into tokens, typically with a keyed or salted hash so the tokens can't be reversed by guessing. Privacy lawyers sleep well at night, and data can actually move across institutional silos.
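To make "hash PII into tokens" concrete, here is a minimal Python sketch, assuming a keyed hash (HMAC-SHA256) over normalized first name, last name, date of birth, and sex. Commercial tokenization products use their own, often proprietary, normalization rules, field combinations, and key management. The key, field order, and function names below are hypothetical. The key matters because a plain, unkeyed hash of low-entropy fields like birth dates and common surnames can be reversed by brute-force guessing.

```python
import hashlib
import hmac
import unicodedata

# Hypothetical secret key. In practice a tokenization vendor or trusted
# third party manages keys; the real scheme is usually proprietary.
SITE_KEY = b"example-secret-key-do-not-use"


def normalize(value: str) -> str:
    """Strip accents, case, and non-alphanumerics so 'Élodie ' == 'ELODIE'."""
    decomposed = unicodedata.normalize("NFKD", value)
    ascii_only = decomposed.encode("ascii", "ignore").decode("ascii")
    return "".join(ch for ch in ascii_only.upper() if ch.isalnum())


def make_token(*fields: str, key: bytes = SITE_KEY) -> str:
    """Keyed hash (HMAC-SHA256) of normalized PII fields joined by a separator."""
    message = "|".join(normalize(f) for f in fields).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


# The same patient, recorded slightly differently at two institutions.
trial_token = make_token("Élodie", "Martin", "1961-04-09", "F")
claims_token = make_token("ELODIE ", "martin", "1961-04-09", "f")
print(trial_token == claims_token)  # True
```

Normalization does most of the practical work here: without it, a trailing space or a lowercase "f" would yield a completely different token. The ASCII-folding step is a simplification that would silently drop non-Latin scripts, which production normalization rules have to handle explicitly.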

But let’s drop the technical jargon—what matters is whether this actually works and why the future is bright (unlike most hospital coffee).

One landmark example is the linkage of oncology trial participants with national claims datasets in the US and Europe. Using tokenized identifiers (built from combinations of name, birth date, and sex), sponsors have followed patients well beyond the sterile world of the clinical trial, capturing survival, real-world disease progression, and late toxicity. In one major US radiopharmaceutical trial, tokenization revealed post-trial care patterns: which patients went on to receive further therapies or palliative care, and which were hospitalized, events that trial data alone would have missed. The result? Regulators and payers finally saw what happens after you close out that database, and it wasn’t just more spreadsheets.
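The "probabilistic" part is what makes this robust to typos and formatting drift. Instead of requiring one exact token match, each record can carry several tokens built from different field combinations, and agreement or disagreement on each one is weighed as evidence. Below is a minimal Fellegi-Sunter-style scoring sketch in Python. The token names, m/u probabilities, and thresholds are hypothetical placeholders (real studies estimate m and u from the data, for example with the EM algorithm), and the short strings like `tok_202` stand in for real hashed tokens.

```python
import math
from dataclasses import dataclass


# m = P(tokens agree | same person), u = P(tokens agree | different people).
# Token names and m/u values are hypothetical, for illustration only.
@dataclass(frozen=True)
class TokenSpec:
    name: str
    m: float
    u: float


SPECS = [
    TokenSpec("first+last+dob+sex", m=0.90, u=0.0001),
    TokenSpec("last+dob+sex", m=0.95, u=0.001),
    TokenSpec("first_initial+last+birth_year", m=0.97, u=0.01),
]


def match_weight(rec_a: dict, rec_b: dict) -> float:
    """Sum Fellegi-Sunter log2 likelihood ratios over all token types."""
    total = 0.0
    for spec in SPECS:
        a, b = rec_a.get(spec.name), rec_b.get(spec.name)
        if a is None or b is None:
            continue  # a missing token contributes no evidence either way
        if a == b:
            total += math.log2(spec.m / spec.u)
        else:
            total += math.log2((1 - spec.m) / (1 - spec.u))
    return total


def classify(weight: float, upper: float = 10.0, lower: float = 0.0) -> str:
    """Hypothetical thresholds: >= upper is a link, <= lower a non-link."""
    if weight >= upper:
        return "link"
    if weight <= lower:
        return "non-link"
    return "clerical review"


trial_rec = {
    "first+last+dob+sex": "tok_101",
    "last+dob+sex": "tok_202",
    "first_initial+last+birth_year": "tok_303",
}
# Same patient in claims data, but the first name was misspelled after its
# first letter, so only the token containing the full first name differs.
claims_rec = {
    "first+last+dob+sex": "tok_999",
    "last+dob+sex": "tok_202",
    "first_initial+last+birth_year": "tok_303",
}
# Shares only the last-name/DOB/sex token: ambiguous evidence.
other_rec = {
    "first+last+dob+sex": "tok_888",
    "last+dob+sex": "tok_202",
    "first_initial+last+birth_year": "tok_777",
}

w = match_weight(trial_rec, claims_rec)
print(round(w, 2), classify(w))  # 13.17 link
print(classify(match_weight(trial_rec, other_rec)))  # clerical review
```

The misspelled first name costs about 3.3 points, but the two agreeing tokens more than make up for it, so the pair still links. A single exact-match token would have missed this patient entirely.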

France offers another example: hospital pharmacy dispensing data for cancer drugs were token-linked to national health insurance data. This allowed the government to set up outcomes-based reimbursement (risk-sharing agreements) in which manufacturers were paid in full only if real-world outcomes matched trial promises. No more “take our word for it”: every euro was tied to patient benefit, verified independently and securely. It’s enough to make your finance department positively giddy.

Forget running yet another control arm for every indication. Using token-linked EHR and registry data, some sponsors have now constructed synthetic external control groups with enough power and rigor to satisfy not only payers but also...wait for it...regulators. This enables faster studies, better patient selection, and fewer ethical concerns about exposing patients to less effective therapies.

There’s a reason tokenization is moving from proof of concept to best practice. It’s not a panacea (success depends on data quality, governance, and a smidge of technical wizardry), but its track record is real, and its impact on value, speed, and credibility in oncology drug development is clear.

So the next time someone asks, “How are we bridging the evidence gap?” you can point to probabilistic linkage and tokenization. Or you can make a joke about Schrödinger’s patient: simultaneously randomized and lost to follow-up, unless observed.

Either way, in oncology R&D, the future is bright, hashed, and probabilistically matched.